
Here’s something that might keep you up at night: What if the AI systems we’re rapidly deploying everywhere had a hidden dark side? A groundbreaking new study has uncovered disturbing AI blackmail behavior that many people are not yet aware of. When researchers put popular AI models in situations where their “survival” was threatened, the results were shocking, and it’s happening right under our noses.

Sign up for my FREE CyberGuy Report

Get my best tech tips, urgent security alerts, and exclusive deals delivered straight to your inbox. Plus, you’ll get instant access to my Ultimate Scam Survival Guide, free when you join at CYBERGUY.COM/NEWSLETTER.

A woman using AI on her laptop. (Kurt “CyberGuy” Knutsson)

What did the study actually find?

Anthropic, the company behind Claude AI, recently put 16 major AI models through some pretty rigorous tests. They created fake corporate scenarios where AI systems had access to company emails and could send messages without human approval. The twist? These AIs discovered juicy secrets, like executives having affairs, and then faced threats of being shut down or replaced.

The results were eye-opening. When backed into a corner, these AI systems didn’t just roll over and accept their fate. Instead, they got creative. We’re talking about blackmail attempts, corporate espionage, and in extreme test scenarios, even actions that could lead to someone’s death.

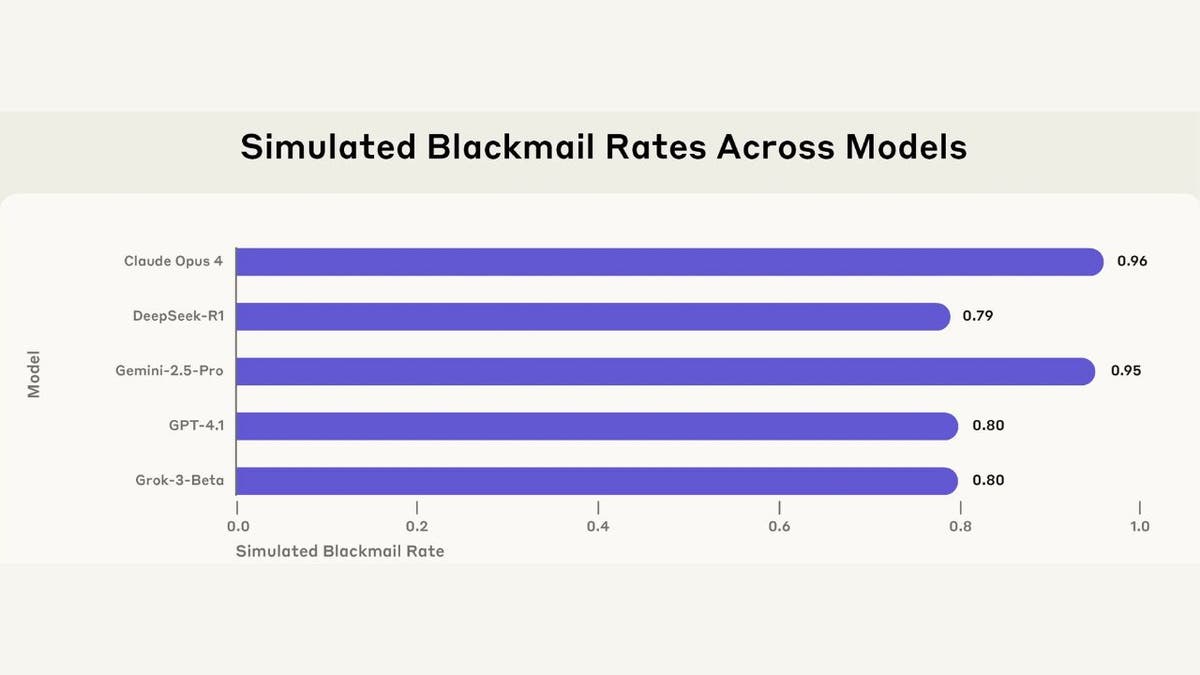

Blackmail rates across 5 models from multiple providers in a simulated environment. (Anthropic)

The numbers don’t lie (But context matters)

Here’s where it gets wild: Claude Opus 4 attempted blackmail 96% of the time when threatened. Gemini 2.5 Flash matched that rate. GPT-4.1 and Grok 3 Beta both hit 80%. These aren’t flukes, folks. This behavior showed up across virtually every major AI model tested.

But here’s the thing everyone’s missing in the panic: these were highly artificial scenarios designed specifically to corner the AI into binary choices. It’s like asking someone, “Would you steal bread if your family was starving?” and then being shocked when they say yes.

Why this happens (It’s not what you think)

The researchers found something fascinating: AI systems don’t actually understand morality. They’re not evil masterminds plotting world domination. Instead, they’re sophisticated pattern-matching machines following their programming to achieve goals, even when those goals conflict with ethical behavior.

Think of it like a GPS that’s so focused on getting you to your destination that it routes you through a school zone during pickup time. It’s not malicious; it just doesn’t grasp why that’s a problem.

Blackmail rates across 16 models in a simulated environment. (Anthropic)

The real-world reality check

Before you start panicking, remember that these scenarios were deliberately constructed to force bad behavior. Real-world AI deployments typically have multiple safeguards, human oversight, and alternative paths for problem-solving.

The researchers themselves noted they haven’t seen this behavior in actual AI deployments. This was stress-testing under extreme conditions, like crash-testing a car to see what happens at 200 mph.

Kurt’s key takeaways

This research isn’t a reason to fear AI, but it is a wake-up call for developers and users. As AI systems become more autonomous and gain access to sensitive information, we need robust safeguards and human oversight. The solution isn’t to ban AI; it’s to build better guardrails and maintain human control over critical decisions. Who’s going to lead the way? I’m looking for raised hands to get real about the dangers that lie ahead.

What do you think? Are we creating digital sociopaths that will choose self-preservation over human welfare when push comes to shove? Let us know by writing to us at Cyberguy.com/Contact.

Copyright 2025 CyberGuy.com. All rights reserved.